Co-founder and CTO of

Deepwater Robotics,

a startup developing

autonomous underwater vehicles that will be deployed at an unprecedented scale

to explore and monitor our oceans.

I completed my PhD studies at the University of Toronto where

my research focused on developing

probabilistic machine learning algorithms that can scale to large and complicated problems

(thesis).

I also developed a

new machine learning course for robotics engineering which I instructed for three years at the University of Toronto.

During my graduate studies I published and reviewed articles at machine learning venues

such as NeurIPS, ICML, and AISTATS.

With AeroVelo Inc., I performed the structural design and

optimization of a

human-powered helicopter

that claimed the Igor I. Sikorsky prize,

one of the largest monetary prizes in aviation history, which had stood unclaimed for 33 years.

I was also one of the pilots of the helicopter and held the FAI world endurance flight record.

Following that project I performed the aerodynamic shape optimization of a

high-speed bicycle

that broke the world human-powered speed record by travelling at 144.2 km/hr (89.6 mph) on a flat highway.

In machine learning, I have spent several years delivering AI tools for industry;

for four years I led the development of a

machine learning library used by Pratt & Whitney,

one of the world’s largest aircraft jet-engine manufacturers.

Deepwater Robotics

Created with my co-founder Dr. Calvin Moes,

Deepwater Robotics

is building a global fleet of compact autonomous underwater vehicles (AUVs) that we will operate throughout the world’s oceans.

Our goal is to provide sub-meter resolution surveys covering the entire ocean once every decade.

There are a lot of decisions that need to be made - and soon - about how to manage our oceans,

and we intend to be the indisputable leaders in providing data to back those decisions.

Infera AI

I’m a co-founder and the chief scientist of Infera AI which

takes a statistically rigorous approach to machine learning to support a range

of industrial AI applications such as making confident quality assurance assessments,

monitoring of machinery health, and performing process optimization and control

even when little or no data has been previously collected.

At Infera, we continue to perform leading machine learning research to develop

industrial solutions that increase the competitiveness of our industry partners,

accelerate AI adoption, and reduce expensive and time-consuming operations.

Learn more at infera.ai

Research

My research interests lie in the following areas:

probabilistic machine learning,

learning continuous-time dynamical systems,

physics informed priors,

discrete variable models, and

onboard machine learning on devices with limited computing resources.

I am also interested in various aspects of scientific computing,

including the

acceleration of numerical algorithms using

stochastic methods,

large-scale linear algebra, and

deep invertible maps.

Below I’ve included a list of my selected publications and theses.

[Google Scholar]

[GitHub]

Advances in Scalable Bayesian Inference: Gaussian Processes & Discrete Variable Models

Trefor W. Evans

University of Toronto, 2022.

[PhD thesis download (.pdf)]

My PhD thesis presents advances in probabilistic machine learning in the areas of Gaussian processes

and discrete variable models.

Gaussian processes (GPs) are exactly the models we would like to use for modern machine learning tasks;

they are non-parametric models whose capacity naturally adapts to the quantity of training data,

are highly interpretable,

offer powerful opportunities to incorporate prior knowledge, and

they deal with uncertainty due to lack of data in a rigorous manner through Bayesian inference.

Unfortunately, the generic algorithm for GP training and inference scales with

O(n^3) time and O(n^2) storage on a problem with n training observations.

Given present-day computational resources, these costs prevent GPs

from being applied beyond modestly sized datasets.

This thesis explores approaches to scale Gaussian process training and inference to

large datasets without sacrificing the benefits of these models.

Specifically, I present theoretical analyses alongside algorithmic advances

in GP modelling and inference for regression and classification problems.
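To make the cubic cost concrete, here is a minimal sketch of textbook exact GP regression; it is not code from the thesis. The squared-exponential kernel, the hyperparameter values, and the names `rbf_kernel` and `gp_posterior_mean` are assumptions chosen for illustration.

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between two sets of 1-D inputs (illustrative choice)."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)


def gp_posterior_mean(x_train, y_train, x_test, noise=1e-2):
    """Posterior mean of a zero-mean GP with Gaussian observation noise."""
    n = x_train.shape[0]
    # Dense n-by-n kernel matrix: this is the O(n^2) storage cost.
    K = rbf_kernel(x_train, x_train) + noise * np.eye(n)
    # Cholesky factorization of K: this is the O(n^3) time cost.
    L = np.linalg.cholesky(K)
    # Solve K @ alpha = y_train using the factor (general solver used for brevity).
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    return rbf_kernel(x_test, x_train) @ alpha


# Toy data: 50 noise-free samples of a sine wave.
x = np.linspace(0.0, 5.0, 50)
y = np.sin(x)
mean = gp_posterior_mean(x, y, x)
```

Doubling n roughly multiplies the factorization time by eight and the memory for `K` by four, which is why this direct approach stops being practical well before datasets reach millions of points.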

Quadruply Stochastic Gaussian Processes

Trefor W. Evans and Prasanth B. Nair.

[Preprint]

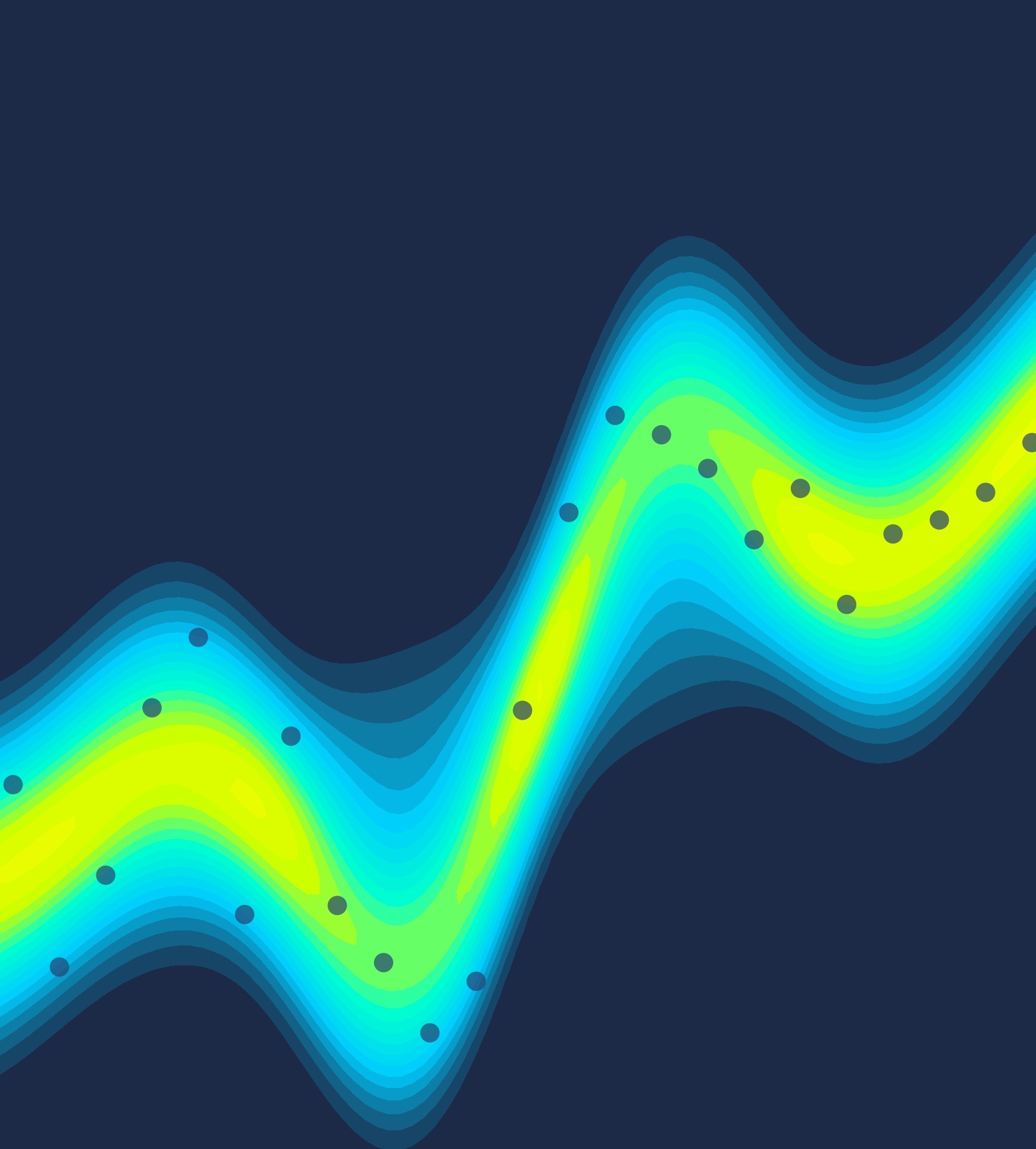

We introduce a stochastic variational inference procedure for training scalable Gaussian process (GP) models whose per-iteration

complexity is independent of both the number of training points, n, and the number of basis functions used in the kernel approximation, m.

Our central contributions include an unbiased stochastic estimator of the evidence lower bound (ELBO) for a Gaussian likelihood,

as well as a stochastic estimator that lower bounds the ELBO for several other likelihoods such as Laplace and logistic.

Independence of the stochastic optimization update complexity on n and m enables inference on huge datasets using large capacity GP models.

We demonstrate accurate inference on large classification and regression datasets using GPs and relevance vector machines with up to m = 10^7 basis functions.

Weak Form Generalized Hamiltonian Learning

Kevin L. Course, Trefor W. Evans and Prasanth B. Nair.

NeurIPS, 2020

[Paper]

[Code]

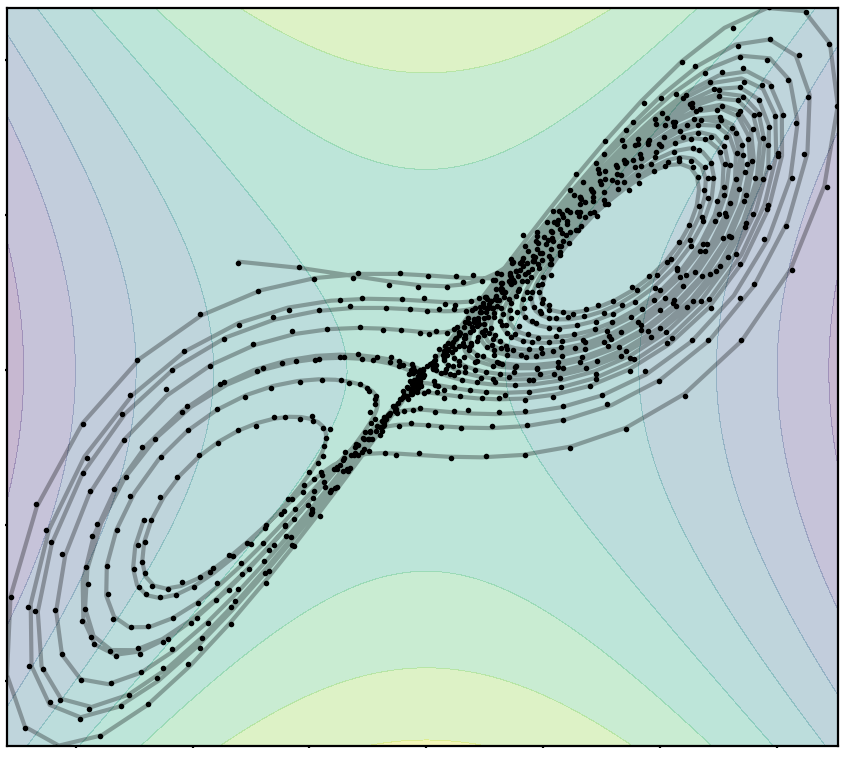

We present a method for learning generalized Hamiltonian decompositions of ordinary differential equations given a

set of noisy time series measurements.

Our method simultaneously learns a continuous time model and a scalar energy function for a general dynamical system.

Learning predictive models in this form allows one to place strong, high-level, physics inspired priors onto the form of the learnt

governing equations for general dynamical systems.

Moreover, having shown how our method extends and unifies some previous work in deep learning with physics inspired priors,

we present a novel method for learning continuous time models from the weak form of the governing equations which

is less computationally

taxing than standard adjoint methods.

Discretely Relaxing Continuous Variables for tractable Variational Inference

Trefor W. Evans and Prasanth B. Nair.

NeurIPS, 2018 (spotlight paper)

[Paper]

[Code]

[Video (3 min)]

[Slides]

[Poster]

[NeurIPS Spotlight]

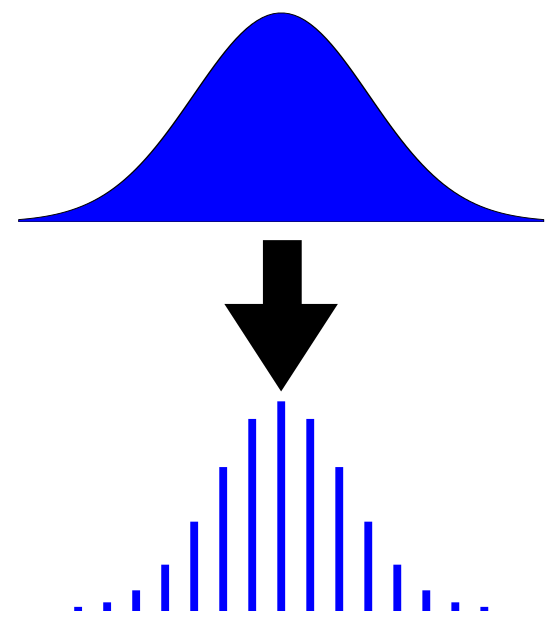

We explore a new research direction in variational inference with discrete latent variable priors.

The proposed “DIRECT” approach

can exactly compute ELBO gradients,

its training complexity is independent of the number of training points, and

its posterior samples consist of sparse and low-precision quantized integers.

Using latent variables discretized as extremely low-precision 4-bit quantized integers, we

demonstrate accurate inference on datasets with over two-million points where training takes just seconds.

Scalable Gaussian Processes with Grid-Structured Eigenfunctions (GP-GRIEF)

Trefor W. Evans and Prasanth B. Nair.

ICML, 2018 (long talk)

[Paper]

[Code]

[Slides]

[Poster]

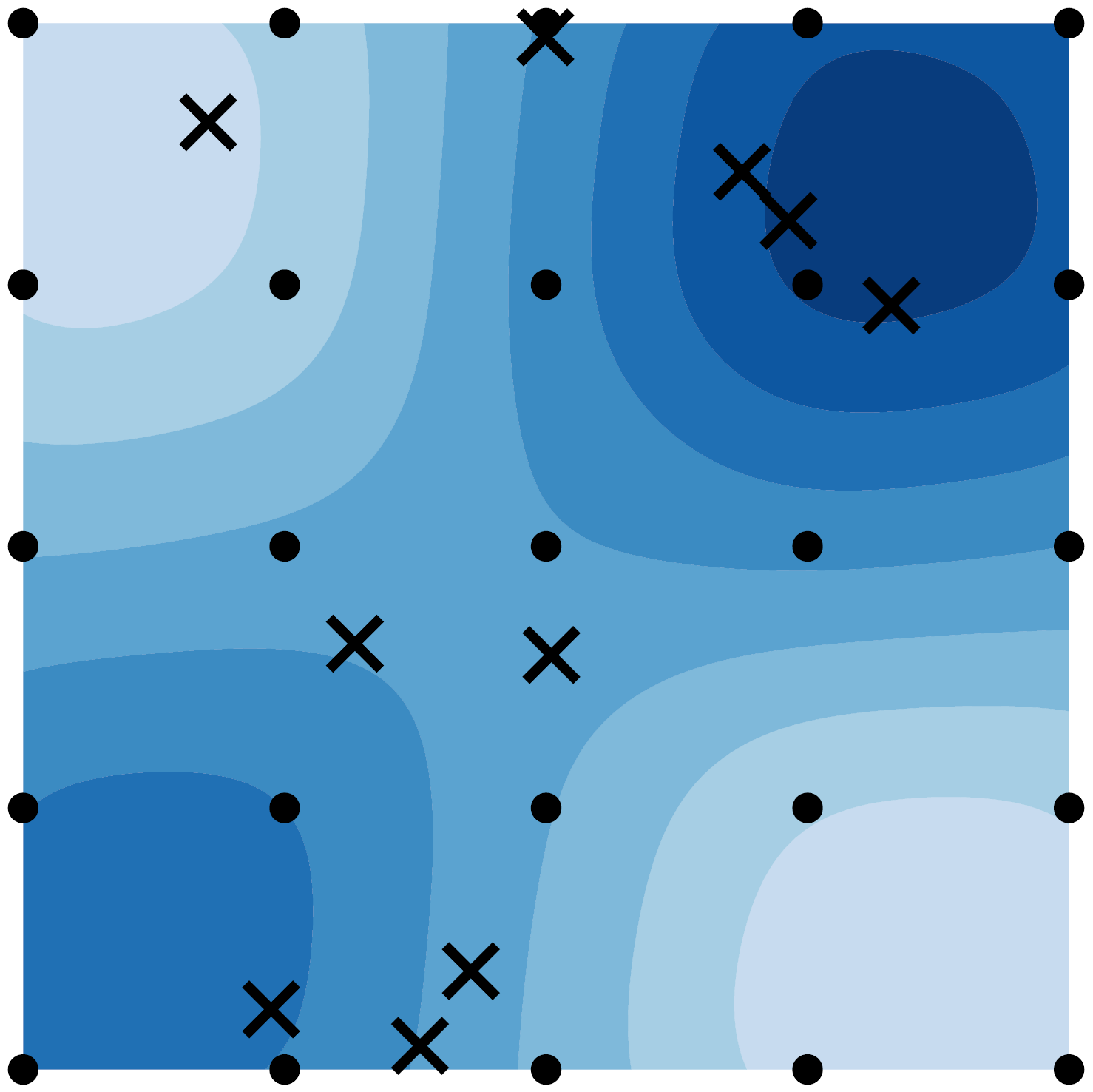

We introduce a kernel approximation strategy that enables computation of the Gaussian Process log marginal likelihood

and all hyperparameter derivatives in O(p) time,

where p is the rank of our approximation.

This fast likelihood evaluation enables type-I or II Bayesian inference on large-scale datasets.

We benchmark our algorithms on real-world problems with up to two-million training points and 10^33 inducing points.

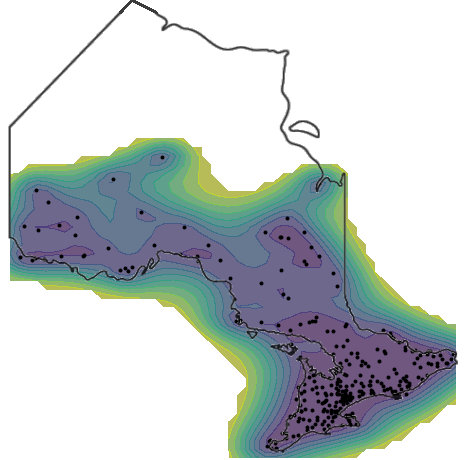

Exploiting Structure for Fast Kernel Learning

Trefor W. Evans and Prasanth B. Nair.

SIAM International Conference on Data Mining (SDM), 2018.

[Paper]

[Code]

[Slides]

[Poster]

We introduce two new approaches to perform exact Gaussian Process (GP) inference on massive image, video,

spatial-temporal, or multi-output datasets

with missing observations.

We demonstrate exact GP inference for a spatial-temporal climate modelling problem with 3.7 million training points

as well as a video reconstruction problem with 1 billion points;

to the best of our knowledge exact GP inference has not been attempted before on this scale.

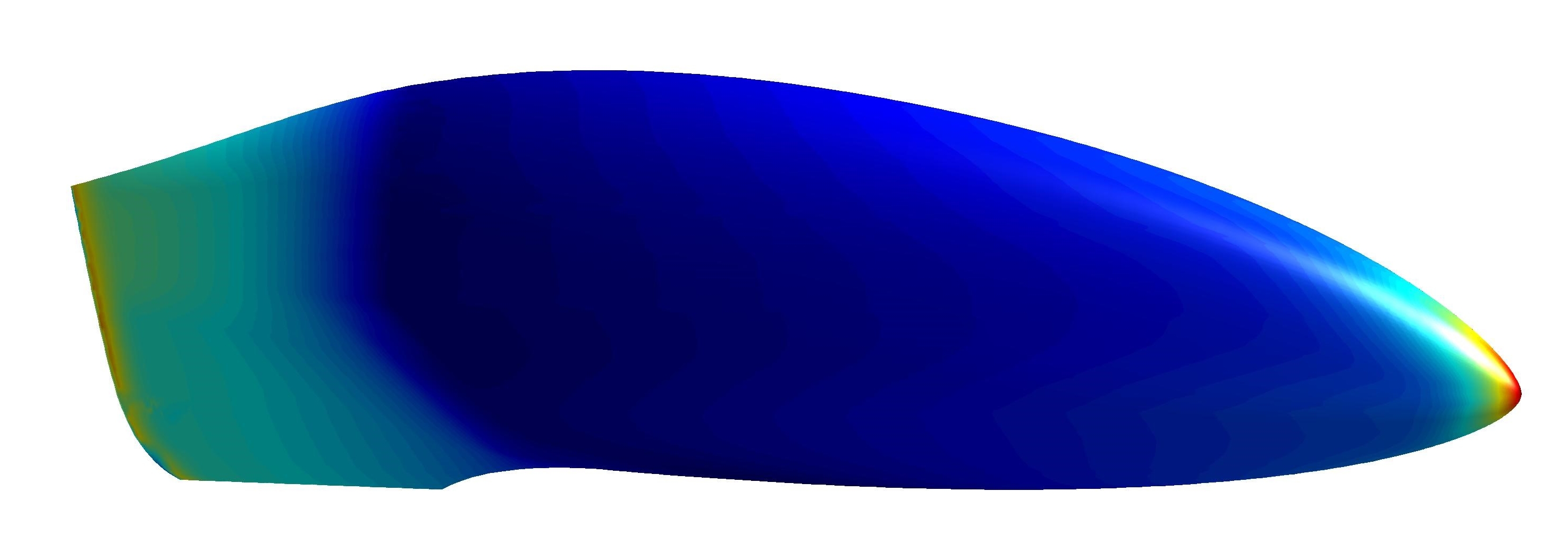

Undergraduate Thesis

Trefor W. Evans

University of Toronto, Engineering Science, 2014.

[Undergraduate thesis (.pdf)]

[Blog Post]

Developed a practical inverse aerodynamic shape optimization design process to achieve highly laminar flow bodies with an optimal pressure recovery.

Using this process, I designed Eta, a vehicle that I later built with AeroVelo and which currently holds the world speed record at 144.17 km/hr on a flat road.

Check out

this video of one of our record-breaking runs, and

the AeroVelo project page.

Teaching

Instructor, Introduction to Learning from Data (ROB313)

[Syllabus] [Introduction to Bayesian inference]

This is a new course at the University of Toronto that I developed in 2019. It is an introductory machine learning course offered by the Division of Engineering Science for 3rd year students in the Robotics Engineering major. Aside from developing and delivering lectures, I managed two great teaching assistants, created interactive demos and tutorials, and developed assignments and exams. I co-instructed ROB313 in the first two years of its offering (2019, 2020) and I was the sole instructor in 2021, with an enrollment of 55 students.

Details about ROB313 can be found in the syllabus. The course focuses on fundamentals rather than covering a breadth of material. It emphasizes the core skills that students need to continue self-guided learning beyond the course, which I believe is particularly important given the rapid rate of development in machine learning and related topics.

I have included my lecture notes covering an introduction to probabilistic models and Gaussian processes. These are conceptually challenging topics for third-year undergraduate students, who found the notes extremely helpful alongside the lectures, particularly following the transition to online learning. The notes rely heavily on visuals and examples, something that I have found to be lacking in other introductory material on this topic and whose absence poses a barrier to entry for students at the undergraduate level.

Teaching assistant positions

- Teaching assistant, Computational Optimization (AER1415)

Graduate course, University of Toronto.

Spring 2017.

- Teaching assistant, Space Systems Design (AER407)

Year 4 undergraduate course, University of Toronto.

Fall 2015, 2016, 2017, 2018, 2019.

- Teaching assistant, Kinematics & Dynamics of Machines (MIE301)

Year 3 undergraduate course, University of Toronto.

Fall 2015, 2016.

- Teaching assistant, Praxis I/II (ESC101/102)

Year 1 undergraduate courses, University of Toronto.

Fall 2015, Spring 2016, Fall 2016.

- Teaching assistant, Engineering Strategies and Practice I/II (APS112/113)

Year 1 undergraduate courses, University of Toronto.

Fall 2015, Spring 2016.

Engineering

Machine learning library for Pratt & Whitney Canada

For four years I was the lead developer of a machine learning library used in ongoing

jet-engine development programs at Pratt & Whitney Canada.

This library provides capabilities for

machine learning,

Bayesian optimization,

asset health monitoring,

visualization, and

uncertainty quantification.

AeroVelo Atlas Human-Powered Helicopter

We developed a human-powered helicopter that won the Igor I. Sikorsky prize,

one of the largest monetary prizes in aviation history, which had stood unclaimed for 33 years.

I led the design, optimization and construction of the massive 20m x 20m x 4m helicopter structure which is currently on permanent display at various museums across the United States:

half of the helicopter is on display at the American Helicopter Museum in Philadelphia,

one quarter is on display at the Aerospace Museum of California in Sacramento, and

one quarter is on display at the New England Air Museum in Connecticut.

I was also a test pilot for the aircraft and I hold the FAI world endurance record for human-powered helicopter flight.

Check out

the short video of us winning the Sikorsky prize,

the short video of my record-breaking endurance flight, as well as

our project page.

AeroVelo Eta Speedbike

Developed a high-speed human-powered land vehicle that travelled at 144.2 km/hr (89.6 mph) on a flat road to break the world land-speed record.

For my undergraduate thesis, I developed a practical aerodynamic shape optimization process to design Eta’s highly laminar flow body (see my blog post).

I also conducted other extensive research projects including structural and aero-structural optimization to design the vehicle’s frame and wheels, and was one of the vehicle’s test pilots.

Check out

the short video of our record-breaking run, and

our project page.